Today, we are going to solve a Dynamic Programming problem and, along the way, understand the fundamentals of brute force and memoization.

Problem Statement: You are climbing a staircase that takes n steps to reach the top. Each time, you can climb either 1 or 2 steps. In how many distinct ways can you climb to the top?

Example 1: If we want to climb two steps, in how many ways can we do it? We have 2 options.

Option 1:- 1 Step + 1 Step

Option 2:- 2 Steps

Figure: Example 1

Example 2: If we want to climb three steps, then we have three options to climb to the top.

Option 1 Red: 1 Step + 1 Step + 1 Step

Option 2 Black: 1 Step + 2 Steps

Option 3 Green: 2 Steps + 1 Step

Figure: Example 2
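To double-check the two examples, here is a minimal sketch that lists every sequence of 1-step and 2-step moves landing exactly on step n. The class `StairPaths` and its method `listPaths` are names made up for this illustration; they are not part of the final solution.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper (illustration only): enumerates every way to climb n stairs.
class StairPaths {

    // Returns each path as a string such as "1 + 2". Assumes n >= 0.
    static List<String> listPaths(int n) {
        List<String> paths = new ArrayList<>();
        build(n, "", paths);
        return paths;
    }

    // remaining = stairs still to climb, prefix = moves chosen so far.
    private static void build(int remaining, String prefix, List<String> paths) {
        if (remaining == 0) {       // landed exactly on the top: keep this path
            paths.add(prefix);
            return;
        }
        if (remaining < 0) {        // stepped past the top: discard this path
            return;
        }
        String sep = prefix.isEmpty() ? "" : " + ";
        build(remaining - 1, prefix + sep + "1", paths); // take 1 step
        build(remaining - 2, prefix + sep + "2", paths); // take 2 steps
    }

    public static void main(String[] args) {
        System.out.println(listPaths(2)); // [1 + 1, 2]
        System.out.println(listPaths(3)); // [1 + 1 + 1, 1 + 2, 2 + 1]
    }
}
```

The output for 2 and 3 stairs matches the options listed in Example 1 and Example 2.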

In the examples above, we can see how many ways there are to climb 2 or 3 stairs, but it is hard to visualize. Let's look at it another way, using a Decision Tree.

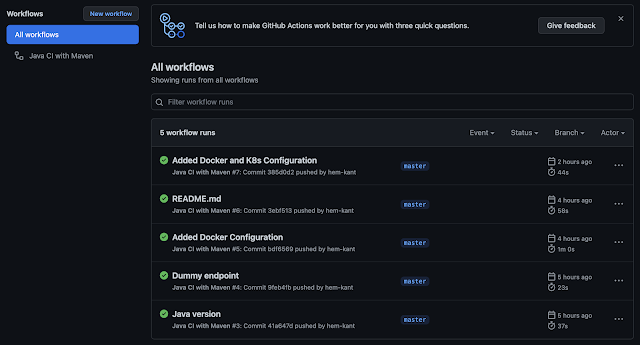

Let's take n = 5, where n is the number of stairs we want to climb. Using a brute-force algorithm, we are going to explore every possible decision.

We start at step 0 and our goal is to reach step 5. At every step we can climb 1 step or 2 steps, and each decision leads to a different path. We keep making these 2 decisions at every step and count how many paths end exactly on step 5. We are going to solve this recursively.

As we can see, there are 8 different GREEN paths that reach 5, and we ignore the RED ones, which overshoot step 5. The original problem asks how many ways there are to reach stair 5 starting from stair 0, but as soon as we reach step 1 we face a sub-problem: how many ways are there to reach 5 from step 1? We can see this in the Decision Tree below.

If we solve the problem this way, using recursion, which is effectively a DFS (Depth-First Search) over the decision tree, what is the time complexity? Because there are two decisions at every step and the tree is at most n levels deep, it is O(2^n).
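The brute-force recursion described above could be sketched like this. It is only an illustration under our own naming: `BruteForceStairs` and `countWays` are assumed names, and the two base cases correspond to the GREEN leaves (we landed on n) and the RED leaves (we went past n) of the decision tree.

```java
// Hypothetical sketch of the brute-force approach (names are illustrative).
class BruteForceStairs {

    // Counts the distinct paths from `step` to the top step `n`.
    static int countWays(int step, int n) {
        if (step == n) {
            return 1;               // reached the top exactly: one valid path
        }
        if (step > n) {
            return 0;               // overshot the top: not a valid path
        }
        // Two decisions at every step: climb 1 stair or climb 2 stairs.
        return countWays(step + 1, n) + countWays(step + 2, n);
    }

    public static void main(String[] args) {
        System.out.println(countWays(0, 5)); // 8
    }
}
```

Every call branches into two more calls, which is where the exponential running time comes from.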

In the screenshot below, the two sub-trees in the red blocks look exactly the same because they solve the same sub-problem. Because we are using DFS, the one on the LEFT is solved first, so why don't we save its result? Then, when we reach the one on the RIGHT, we already know the result and don't have to draw the entire decision tree again.

Now look at the other sub-problem, which starts at step 3: we are asking the same question, how many ways are there to reach 5? Here too, the LEFT one is computed first, so we don't need the RIGHT one and can eliminate it.

Is there another repeated sub-problem? Yes, at step 4: it has exactly the same decision tree, the one on the LEFT is computed first, and we eliminate the RIGHT one.

After eliminating all the repeated work, the Decision Tree ends up looking like this. It is roughly O(n), a linear-time solution, because we solve each sub-problem only once.

The original problem starts at 0, and the sub-problems start at 1, then 2, then 3, then 4, and finally 5. This is basically DP (Dynamic Programming), where we cache the result of each sub-problem (memoization).
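Here is a minimal sketch of that caching idea. It assumes a `HashMap` keyed by the step number as the cache; the class and method names (`MemoizedStairs`, `countWays`) are our own choices for illustration, and an array would work just as well.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch of the memoized (top-down) approach.
class MemoizedStairs {

    static int countWays(int n) {
        return countWays(0, n, new HashMap<>());
    }

    // cache maps a step to the number of ways to reach n from that step.
    private static int countWays(int step, int n, Map<Integer, Integer> cache) {
        if (step == n) {
            return 1;               // reached the top exactly
        }
        if (step > n) {
            return 0;               // overshot the top
        }
        Integer cached = cache.get(step);
        if (cached != null) {
            return cached;          // sub-problem already solved on the LEFT
        }
        int ways = countWays(step + 1, n, cache) + countWays(step + 2, n, cache);
        cache.put(step, ways);      // save the result for the RIGHT side
        return ways;
    }

    public static void main(String[] args) {
        System.out.println(countWays(5));  // 8
        System.out.println(countWays(30)); // 1346269
    }
}
```

Each step from 0 to n is computed once and then read from the cache, which is what brings the running time down to roughly O(n).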

The MOST interesting part is that we now know the base case: being at step 5, the top. So there is another way to solve this, a bottom-up approach. Instead of starting from step 0 and recursing through the tree, we start computing from the TOP, at the base case, and work backwards.

In this approach, we go from 5 down to 0.

At step 5 we are already at the top, so there is exactly 1 way. This is our base case.

- From 4, we just need to take one more step to reach 5, so there is 1 way.

- Next is step 3. Here we can take 1 step (to 4) or 2 steps (straight to 5), so there are 2 ways in total.

- Next, from 2: if we take one step we land on 3, and we have already computed that there are 2 ways to get from 3 to 5. If we take two steps we land on 4, which has 1 way. Adding them gives 2 ways + 1 way = 3 ways.

- If we notice and are familiar with Fibonacci numbers, that is exactly what we are doing. 😃
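The walk from 5 down to 0 can be written out as a small table. The sketch below assumes an array `ways` where `ways[i]` holds the number of ways to get from step i to the top; `BottomUpStairs` and `buildTable` are illustrative names, and n is assumed to be 0 or greater.

```java
import java.util.Arrays;

// Hypothetical sketch of the bottom-up table (illustration only).
class BottomUpStairs {

    // ways[i] = number of distinct ways to climb from step i to step n.
    static int[] buildTable(int n) {
        int[] ways = new int[n + 1];
        ways[n] = 1;                        // base case: already at the top
        if (n >= 1) {
            ways[n - 1] = 1;                // one step below the top: a single 1-step move
        }
        for (int i = n - 2; i >= 0; i--) {
            ways[i] = ways[i + 1] + ways[i + 2]; // take 1 step or take 2 steps
        }
        return ways;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(buildTable(5))); // [8, 5, 3, 2, 1, 1]
    }
}
```

Reading the array from right to left gives 1, 1, 2, 3, 5, 8, the Fibonacci pattern, and `ways[0]` is the answer. Since each entry depends only on the two entries after it, two variables are enough to hold the state, so the whole array is not really needed.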

Since the values for the top step and the step just below it are both 1, we only have to loop n-1 times to compute the remaining values and get the result. Now let's do some coding, and see how much code we need to write to solve this problem.

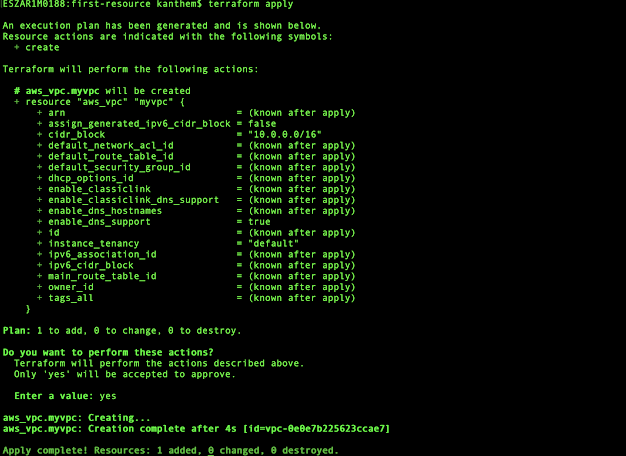

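```java
package com.hemkant.DSA;

public class ClimbingStairs {

    public static void main(String[] args) {
        System.out.println("Number of ways = " + findSteps(5));
    }

    // Returns the number of distinct ways to climb n stairs, taking 1 or 2 steps at a time.
    static int findSteps(int n) {
        int temp;
        // num1 = ways from the current step, num2 = ways from the step above it.
        // Both start at 1: the top itself and the step just below it.
        int num1 = 1, num2 = 1;

        // Walk down from step n-2 to step 0, one stair per iteration.
        for (int i = 0; i < (n - 1); i++) {
            temp = num1;
            num1 = num1 + num2; // ways(i) = ways(i+1) + ways(i+2)
            num2 = temp;
        }
        return num1;
    }
}
```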

Yes, that's all we need to do to solve this problem.

Code Repo

Happy Coding and Keep Sharing!!