In my current project we are using SDL WEB 8.1 and DXA 1.5 ,client come up with the new request and wants to read all the items in CMS in english only, In the current implementation we have multiple locales, changing the component title to english from other multilingual language title is not the big challenge but when its come to changing the SG and Page name to english for multilingual site is an issue, because we are using DXA default implementation to render Sitemap,Breadcrumb and Navigation.

Client Requirement:- Client wants to read name of all the items in CMS in english, for content editor point of view and at the same time we need to manage the Navigation,Sitemap and Breadcrumb for the multilingual website.

One solution is that,we write our own custom TBB and push the output ,but its a lengthy process and time taking as well.What I did is I made the changes in the existing TBB code and its working fine.

Let's have a look

Changes required in the existing TBB and in CMS:- Create a common Metadata schema and add link component field assign it to Structure group and Page.Update linked component field(s) value.Then, we need to update the existing SitemapItemData.cs model and add new property project name Sdl.Web.DataModel. Next is go to the GenerateSiteMap.cs file you will find this file in project Sdl.Web.Tridion.Templates and populate the value by reading the Metadata returned by the Tridion.ContentManager for StructureGroup and Page type.

How to debug the TBB:- There is a complete article available on SDL docx on how to debug the TBB.

How to upload the updated TBB:- You need to use TcmUploadAssembly.exe to upload the TBB this will ask you DLL source,CMS url,Item folder location(tcmID),userId and Password.I have created a batch file for this.

Pre-Requisites:- To build the TBB code you are required to add reference of following DLLs.

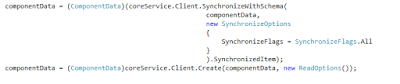

Once all the above changes are done you have successfully updated the TBB and added new field in Navigation.JSON ,now we need to update the code at CD side in DXA.

You need to update the following files in order to read the new field.

Happy Coding and Keep Sharing !!!

Client Requirement:- Client wants to read name of all the items in CMS in english, for content editor point of view and at the same time we need to manage the Navigation,Sitemap and Breadcrumb for the multilingual website.

One solution is that,we write our own custom TBB and push the output ,but its a lengthy process and time taking as well.What I did is I made the changes in the existing TBB code and its working fine.

Let's have a look

- Approach I followed.

- Changes required in the existing TBB and in CMS.

- How to debug the TBB.

- How to upload the updated TBB in CMS.

- And some Pre-requisites.

Approach I followed:- I decide to create a common metadata schema and have linked component attached in it for both page and structure group and will read component value in the Generate Sitemap TBB, we can always made code tweaks in the existing one.,rather than creating new TBB.

Changes required in the existing TBB and in CMS:- Create a common Metadata schema and add link component field assign it to Structure group and Page.Update linked component field(s) value.Then, we need to update the existing SitemapItemData.cs model and add new property project name Sdl.Web.DataModel. Next is go to the GenerateSiteMap.cs file you will find this file in project Sdl.Web.Tridion.Templates and populate the value by reading the Metadata returned by the Tridion.ContentManager for StructureGroup and Page type.

How to debug the TBB:- There is a complete article available on SDL docx on how to debug the TBB.

How to upload the updated TBB:- You need to use TcmUploadAssembly.exe to upload the TBB this will ask you DLL source,CMS url,Item folder location(tcmID),userId and Password.I have created a batch file for this.

| TBB Upload |

Pre-Requisites:- To build the TBB code you are required to add reference of following DLLs.

- Tridion.Common

- Tridion.ContentManager

- Tridion.ContentManager.Common

- Tridion.ContentManager.Publishing

- Tridion.ContentManager.Templating

- Tridion.ExternalContentLibrary

- Tridion.ExternalContentLibrary.V2

- Tridion.Logging

- Tridion.TopologyManager.Client

- Microsoft.OData.Client

Once all the above changes are done you have successfully updated the TBB and added new field in Navigation.JSON ,now we need to update the code at CD side in DXA.

You need to update the following files in order to read the new field.

- Update the Link Model at Sdl.Web.Common.Models

- Update the SitemapItem Model at Sdl.Web.Common.Models both are available in DXA framework .

- Now, we need to assign the value in the updated model go to CreateLink() method at Sdl.Web.Tridion Class name DefaultProvider.cs and assign the new property.

- Build your code and Run.

- Debug Breadcrumb.CHTML and TopNavigation.CHTML page you will have new field value in the model returned.

Happy Coding and Keep Sharing !!!