In my previous blog, we saw how Published Summary plugin was conceptualized, its use cases and how it works and its different functionalities.

In this Plugin, I wrote the C# code that interacts with Core Service and forwards the JSON response to be consumed on FrontEnd.

Based on the use cases and feature of this plugin I wrote the Endpoints on the following scenarios and provided the JSON.

Publish and UnPublish Items

The Publish and UnPublish method is the POST request method and deserialized JSON into C# Model.

{"TcmIds":[{Id:"tcm:14-65-4",Target:"staging"},{Id:"tcm:14-77-64",Target:"staging"}]}

Summary Panel

The GetSummaryPanelData method is the POST request method and deserialized JSON in C# modal.

JSON format is {'IDs':['tcm:14-65-4']}

Get All Publication and Target Type

You can download the code from the Github.

Happy Coding and Keep Sharing !!!

In this Plugin, I wrote the C# code that interacts with Core Service and forwards the JSON response to be consumed on FrontEnd.

Based on the use cases and feature of this plugin I wrote the Endpoints on the following scenarios and provided the JSON.

- Get All Published Items from Publication, Structure Group, and Folder.

- Let's say if we select Publication then the response will be all Published Pages, Components, and Categories in that publication.

- If we select this plugin from Structure group, then we will have all the published pages in the structure group and same goes for Folder selection all Published ComponentTemplates and Components.

- Publish Items that are selected by the user from the Plugin UI.

- Unpublish the Items that are select by the user from the Plugin UI.

- Summary Panel, where we'll have a Snapshot of how many items are published in a particular publication, basically a GroupBy of itemsType with the count.

- And Finally, Get all Publication and Target Type.

Now, Let's look at the code at High Level

Get All Published Item

The GetAllPublishedItems method is the POST request method and deserialized JSON in C# modal.

JSON format is {'IDs':['tcm:14-65-4']}

The GetAllPublishedItems method is the POST request method and deserialized JSON in C# modal.

JSON format is {'IDs':['tcm:14-65-4']}

[HttpPost, Route("GetAllPublishedItems")]

public object GetAllPublishedItems(TcmIds tcmIDs)

{

GetPublishedInfo getFinalPublishedInfo = new GetPublishedInfo();

var multipleListItems = new List<ListItems>();

XmlDocument doc = new XmlDocument();

try

{

foreach (var tcmId in tcmIDs.IDs)

{

TCM.TcmUri iTcmUri = new TCM.TcmUri(tcmId.ToString());

XElement listXml = null;

switch (iTcmUri.ItemType.ToString())

{

case CONSTANTS.PUBLICATION:

listXml = Client.GetListXml(tcmId.ToString(), new RepositoryItemsFilterData

{

ItemTypes = new[] { ItemType.Component, ItemType.ComponentTemplate, ItemType.Category, ItemType.Page },

Recursive = true,

BaseColumns = ListBaseColumns.Extended

});

break;

case CONSTANTS.FOLDER:

listXml = Client.GetListXml(tcmId.ToString(), new OrganizationalItemItemsFilterData

{

ItemTypes = new[] { ItemType.Component, ItemType.ComponentTemplate },

Recursive = true,

BaseColumns = ListBaseColumns.Extended

});

break;

case CONSTANTS.STRUCTUREGROUP:

listXml = Client.GetListXml(tcmId.ToString(), new OrganizationalItemItemsFilterData()

{

ItemTypes = new[] { ItemType.Page },

Recursive = true,

BaseColumns = ListBaseColumns.Extended

});

break;

case CONSTANTS.CATEGORY:

listXml = Client.GetListXml(tcmId.ToString(), new RepositoryItemsFilterData

{

ItemTypes = new[] { ItemType.Category },

Recursive = true,

BaseColumns = ListBaseColumns.Extended

});

break;

default:

throw new ArgumentOutOfRangeException();

}

if (listXml == null) throw new ArgumentNullException(nameof(listXml));

doc.LoadXml(listXml.ToString());

multipleListItems.Add(TransformObjectAndXml.Deserialize<ListItems>(doc));

}

return getFinalPublishedInfo.FilterIsPublishedItem(multipleListItems).SelectMany(publishedItem => publishedItem, (publishedItem, item) => new { publishedItem, item }).Select(@t => new { @t, publishInfo = Client.GetListPublishInfo(@t.item.ID) }).SelectMany(@t => getFinalPublishedInfo.ReturnFinalList(@t.publishInfo, @t.@t.item)).ToList();

}

catch (Exception ex)

{

throw new HttpResponseException(Request.CreateErrorResponse(HttpStatusCode.InternalServerError, ex.Message));

}

}

Publish and UnPublish Items

The Publish and UnPublish method is the POST request method and deserialized JSON into C# Model.

{"TcmIds":[{Id:"tcm:14-65-4",Target:"staging"},{Id:"tcm:14-77-64",Target:"staging"}]}

#region Publishe the items

/// <summary>

/// Publishes the items.

/// </summary>

/// <param name="IDs">The i ds.</param>

/// <returns>System.Int32.</returns>

/// <exception cref="ArgumentNullException">result</exception>

[HttpPost, Route("PublishItems")]

public string PublishItems(PublishUnPublishInfoData IDs)

{

try

{

var pubInstruction = new PublishInstructionData()

{

ResolveInstruction = new ResolveInstructionData() { IncludeChildPublications = false },

RenderInstruction = new RenderInstructionData()

};

PublishTransactionData[] result = null;

var tfilter = new TargetTypesFilterData();

var allPublicationTargets = Client.GetSystemWideList(tfilter);

if (allPublicationTargets == null) throw new ArgumentNullException(nameof(allPublicationTargets));

foreach (var pubdata in IDs.IDs)

{

var target = allPublicationTargets.Where(x => x.Title == pubdata.Target).Select(x => x.Id).ToList();

if (target.Any())

{

result = Client.Publish(new[] { pubdata.Id }, pubInstruction, new[] { target[0] }, PublishPriority.Normal, null);

if (result == null) throw new ArgumentNullException(nameof(result));

}

}

return "Item send to Publish";

}

catch (Exception ex)

{

throw new HttpResponseException(Request.CreateErrorResponse(HttpStatusCode.InternalServerError, ex.Message));

}

}

#endregion

#region Unpublish the items

/// <summary>

/// Uns the publish items.

/// </summary>

/// <param name="IDs">The i ds.</param>

/// <returns>System.Object.</returns>

/// <exception cref="ArgumentNullException">result</exception>

/// <exception cref="HttpResponseException"></exception>

[HttpPost, Route("UnPublishItems")]

public string UnPublishItems(PublishUnPublishInfoData IDs)

{

try

{

var unPubInstruction = new UnPublishInstructionData()

{

ResolveInstruction = new ResolveInstructionData()

{

IncludeChildPublications = false,

Purpose = ResolvePurpose.UnPublish,

},

RollbackOnFailure = true

};

PublishTransactionData[] result = null;

var tfilter = new TargetTypesFilterData();

var allPublicationTargets = Client.GetSystemWideList(tfilter);

if (allPublicationTargets == null) throw new ArgumentNullException(nameof(allPublicationTargets));

foreach (var tcmID in IDs.IDs)

{

var target = allPublicationTargets.Where(x => x.Title == tcmID.Target).Select(x => x.Id).ToList();

if (target.Any())

{

result = Client.UnPublish(new[] { tcmID.Id }, unPubInstruction, new[] { target[0] }, PublishPriority.Normal, null);

if (result == null) throw new ArgumentNullException(nameof(result));

}

}

return "Items send for Unpublish";

}

catch (Exception ex)

{

throw new HttpResponseException(Request.CreateErrorResponse(HttpStatusCode.InternalServerError, ex.Message));

}

}

#endregion

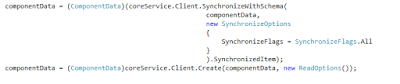

Summary Panel

The GetSummaryPanelData method is the POST request method and deserialized JSON in C# modal.

JSON format is {'IDs':['tcm:14-65-4']}

#region Get GetSummaryPanelData

/// <summary>

/// Gets the analytic data.

/// </summary>

/// <returns>System.Object.</returns>

[HttpPost, Route("GetSummaryPanelData")]

public object GetSummaryPanelData(TcmIds tcmIDs)

{

try

{

GetPublishedInfo getFinalPublishedInfo = new GetPublishedInfo();

var multipleListItems = new List<ListItems>();

XmlDocument doc = new XmlDocument();

foreach (var tcmId in tcmIDs.IDs)

{

var listXml = Client.GetListXml(tcmId.ToString(), new RepositoryItemsFilterData

{

ItemTypes = new[] { ItemType.Component, ItemType.ComponentTemplate, ItemType.Category, ItemType.Page },

Recursive = true,

BaseColumns = ListBaseColumns.Extended

});

if (listXml == null) throw new ArgumentNullException(nameof(listXml));

doc.LoadXml(listXml.ToString());

multipleListItems.Add(TransformObjectAndXml.Deserialize<ListItems>(doc));

}

List<Item> finalList = new List<Item>();

foreach (var publishedItem in getFinalPublishedInfo.FilterIsPublishedItem(multipleListItems))

foreach (var item in publishedItem)

{

var publishInfo = Client.GetListPublishInfo(item.ID);

foreach (var item1 in getFinalPublishedInfo.ReturnFinalList(publishInfo, item)) finalList.Add(item1);

}

IEnumerable<Analytics> analytics = finalList.GroupBy(x => new { x.PublicationTarget, x.Type }).Select(g => new Analytics { Count = g.Count(), PublicationTarget = g.Key.PublicationTarget, ItemType = g.Key.Type, });

var tfilter = new TargetTypesFilterData();

List<ItemSummary> itemssummary = getFinalPublishedInfo.SummaryPanelData(analytics, Client.GetSystemWideList(tfilter));

return itemssummary;

}

catch (Exception ex)

{

throw new HttpResponseException(Request.CreateErrorResponse(HttpStatusCode.InternalServerError, ex.Message));

}

}

#endregion

Get All Publication and Target Type

#region Get list of all publications

/// <summary>

/// Gets the publication list.

/// </summary>

/// <returns>List<Publications>.</returns>

[HttpGet, Route("GetPublicationList")]

public List<Publications> GetPublicationList()

{

GetPublishedInfo getPublishedInfo = new GetPublishedInfo();

XmlDocument publicationList = new XmlDocument();

PublicationsFilterData filter = new PublicationsFilterData();

XElement publications = Client.GetSystemWideListXml(filter);

if (publications == null) throw new ArgumentNullException(nameof(publications));

List<Publications> publicationsList = getPublishedInfo.Publications(publicationList, publications);

return publicationsList;

}

#endregion

#region Get List of all publication targets

/// <summary>

/// Gets the publication target.

/// </summary>

/// <returns>System.Object.</returns>

[HttpGet, Route("GetPublicationTarget")]

public object GetPublicationTarget()

{

var filter = new TargetTypesFilterData();

var allPublicationTargets = Client.GetSystemWideList(filter);

if (allPublicationTargets == null) throw new ArgumentNullException(nameof(allPublicationTargets));

return allPublicationTargets;

}

#endregion

You can download the code from the Github.

Happy Coding and Keep Sharing !!!